As a private and technical person, it is common to be afraid of the data capturing capabilities of current LLM models. Because of this, I decided to create a small post explaining how to set up your own LLM workspace in your own computer, so you can still can use the latest LLMs without being worried about being spied.

Basic requirements

Notice that one of the downsides of LLMs is that they require somewhat expensive hardware to run on. The good news is that most modern gaming GPUs (even the gaming ones) are capable of running most of the models. So, if you happen to have one of the latest GPUs, you very likely will be able to run LLMs.

Installing software

- Start by installing NVIDIA drivers. For generic use:

sudo ubuntu-drivers install - Restart computer

- Check that Nvidia drivers are installed by typing the following in terminal:

nvidia-smi - Install CUDA:

sudo apt install nvidia-cuda-toolkit - Check that CUDA installation was succesfull by typing the following in terminal:

nvcc --version - Install Ollama tool by typing:

curl https://ollama.ai/install.sh | sh

Running the LLMs

- Decide which LLM model you want to use (Check available LLM models here). (Note that you may be limited based on the available RAM).

- Start Ollama server by typing the following in a terminal:

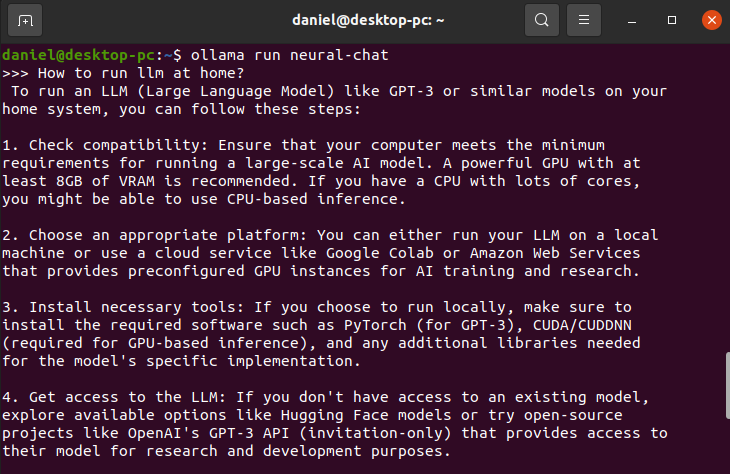

ollama serve - Run the model by typing the following in a terminal:

ollama run {llm-name}(example:ollama run llama3)

Using LLMs via Python

import ollama

# Generate prompt

prompt = f'How to run llm at home?'

# Request response LLM

response = ollama.generate(model=model, prompt=prompt, options={'temperature': 0})

print(response['response'])