As a data engineer, it is quite common to be on the lookout for useful (or at least interesting) websites to scrape data from.

However, different projects often start the same way (e.g. downloading HTML from the internet) but end up processing the data (ETL) in different ways.

Because of this, I have set up a simple Raspberry Pi as my centralized web scraping service, where:

- Websites, files, … can be downloaded into a raw format.

- Raw files can be processed in the right order.

- Data is made available for analytics/reporting/…

- Monitoring capabilities are available.

1. Requirements

For this to work, I identified the following tools as basic requirements:

- Airflow (orchestration tool): As each project's logic may be different (how and in which order files need to be processed), an orchestration tool is needed to keep track of each project's status and to ensure that tasks run in the right order.

- Selenium (browser automation tool): As most projects will need to download data from the web, a single shared browser automation service avoids having every project spawn its own browser.

- Docker Compose: To keep everything consistent, isolated and easily replicable, I set up everything via a single Docker Compose file. This makes it easy to manage all services and ensures they can discover each other, as Docker Compose attaches ALL the services defined in the SAME Compose file to the SAME network, where they can reach each other by service name.

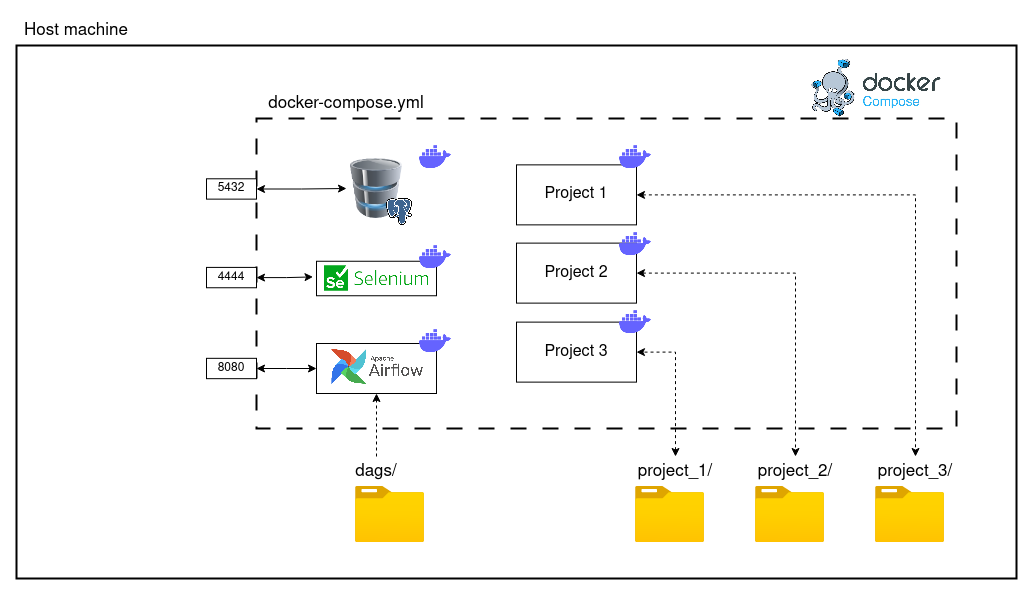

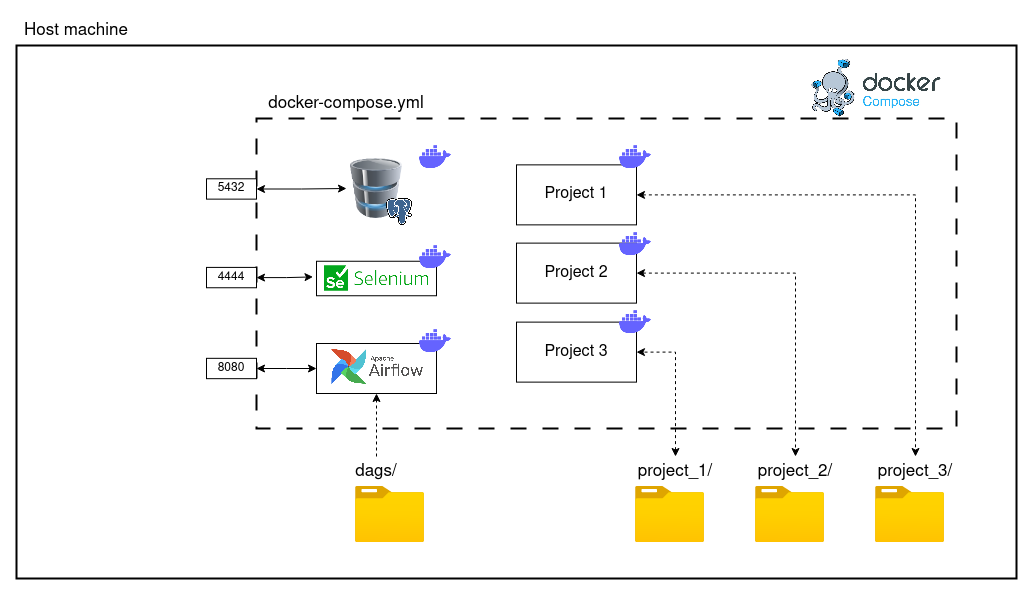

2. Web scraping service architecture

As the web scraping service will be installed on a single host (for the time being at least), the architecture will remain very simple. Find below an overview of the architecture:

(Note that my plan is to move to a microservice-oriented architecture once the number of projects grows. For the time being, however, the proposed architecture should be enough for my needs, as a microservice architecture raises its own challenges (registering the services, making them discoverable, managing different Docker instances, …).)

2.1 Design choices

I would like to keep Airflow as independent as possible, only copying each project's DAGs into Airflow's dags folder from the project's original repository. I chose this approach because, as the number of projects increases, it will become very hard to know which project a particular DAG belongs to, and since each project has its own order/logic, it makes sense to keep its DAG as close as possible to its source code.
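A minimal sketch of that copy step, assuming (hypothetically) that each repository keeps its DAG files at its root with names ending in `_dag.py` (like `project_1_dag.py` later on) and that all repositories live under one folder. `sync_dags` is an illustrative helper, not part of Airflow.

```python
import shutil
from pathlib import Path


def sync_dags(repos_root: Path, dags_dir: Path, pattern: str = "*_dag.py") -> list[Path]:
    """Copy DAG files matching `pattern` from every repository under
    `repos_root` into Airflow's `dags_dir`; return the copied destinations."""
    dags_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    # Each direct child folder of repos_root is treated as one project repository.
    for repo in sorted(p for p in repos_root.iterdir() if p.is_dir()):
        for dag_file in sorted(repo.glob(pattern)):
            target = dags_dir / dag_file.name
            # copy2 also preserves file metadata such as the modification time.
            shutil.copy2(dag_file, target)
            copied.append(target)
    return copied
```

Calling `sync_dags(Path("GitHub"), Path("Airflow/dags"))` after pulling changes would refresh Airflow's copies. Since files land in a single flat folder, prefixing each DAG filename with its project name keeps two projects from overwriting each other.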

3. Setting up the service

As mentioned in the introduction, since all the services will live on the same host machine, one Docker Compose file should be enough to set everything up correctly. However, certain steps must be followed before starting the Docker containers.

3.1. Preparation

3.1.1. Preparing Airflow

I start by navigating (`cd`) to the location where the Airflow installation will reside. There, I create the following file:

docker-compose.yml

```yaml
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing,
# software distributed under the License is distributed on an
# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
# KIND, either express or implied. See the License for the
# specific language governing permissions and limitations
# under the License.
#
# Basic Airflow cluster configuration for CeleryExecutor with Redis and PostgreSQL.
#
# WARNING: This configuration is for local development. Do not use it in a production deployment.
#
# This configuration supports basic configuration using environment variables or an .env file
# The following variables are supported:
#
# AIRFLOW_IMAGE_NAME - Docker image name used to run Airflow.
# Default: apache/airflow:2.7.3
# AIRFLOW_UID - User ID in Airflow containers
# Default: 50000
# AIRFLOW_PROJ_DIR - Base path to which all the files will be volumed.
# Default: .
# Those configurations are useful mostly in case of standalone testing/running Airflow in test/try-out mode
#
# _AIRFLOW_WWW_USER_USERNAME - Username for the administrator account (if requested).
# Default: airflow
# _AIRFLOW_WWW_USER_PASSWORD - Password for the administrator account (if requested).
# Default: airflow
# _PIP_ADDITIONAL_REQUIREMENTS - Additional PIP requirements to add when starting all containers.
# Use this option ONLY for quick checks. Installing requirements at container
# startup is done EVERY TIME the service is started.
# A better way is to build a custom image or extend the official image
# as described in https://airflow.apache.org/docs/docker-stack/build.html.
# Default: ''
#
# Feel free to modify this file to suit your needs.
---
version: '3.8'
x-airflow-common:
  &airflow-common
  # In order to add custom dependencies or upgrade provider packages you can use your extended image.
  # Comment the image line, place your Dockerfile in the directory where you placed the docker-compose.yaml
  # and uncomment the "build" line below, Then run `docker-compose build` to build the images.
  image: ${AIRFLOW_IMAGE_NAME:-apache/airflow:2.7.3}
  # build: .
  environment:
    &airflow-common-env
    AIRFLOW__CORE__EXECUTOR: CeleryExecutor
    AIRFLOW__DATABASE__SQL_ALCHEMY_CONN: postgresql+psycopg2://airflow:airflow@postgres/airflow
    # For backward compatibility, with Airflow <2.3
    AIRFLOW__CORE__SQL_ALCHEMY_CONN: postgresql+psycopg2://airflow:airflow@postgres/airflow
    AIRFLOW__CELERY__RESULT_BACKEND: db+postgresql://airflow:airflow@postgres/airflow
    AIRFLOW__CELERY__BROKER_URL: redis://:@redis:6379/0
    AIRFLOW__CORE__FERNET_KEY: ''
    AIRFLOW__CORE__DAGS_ARE_PAUSED_AT_CREATION: 'true'
    AIRFLOW__CORE__LOAD_EXAMPLES: 'false'
    AIRFLOW__API__AUTH_BACKENDS: 'airflow.api.auth.backend.basic_auth,airflow.api.auth.backend.session'
    # yamllint disable rule:line-length
    # Use simple http server on scheduler for health checks
    # See https://airflow.apache.org/docs/apache-airflow/stable/administration-and-deployment/logging-monitoring/check-health.html#scheduler-health-check-server
    # yamllint enable rule:line-length
    AIRFLOW__SCHEDULER__ENABLE_HEALTH_CHECK: 'true'
    # WARNING: Use _PIP_ADDITIONAL_REQUIREMENTS option ONLY for a quick checks
    # for other purpose (development, test and especially production usage) build/extend Airflow image.
    _PIP_ADDITIONAL_REQUIREMENTS: ${_PIP_ADDITIONAL_REQUIREMENTS:-}
  volumes:
    - ${AIRFLOW_PROJ_DIR:-.}/dags:/opt/airflow/dags
    - ${AIRFLOW_PROJ_DIR:-.}/logs:/opt/airflow/logs
    - ${AIRFLOW_PROJ_DIR:-.}/config:/opt/airflow/config
    - ${AIRFLOW_PROJ_DIR:-.}/plugins:/opt/airflow/plugins
  user: "${AIRFLOW_UID:-50000}:0"
  depends_on:
    &airflow-common-depends-on
    redis:
      condition: service_healthy
    postgres:
      condition: service_healthy

services:
  #### Non-Airflow related containers ####

  #### Airflow related containers ####
  postgres:
    image: postgres:13
    environment:
      POSTGRES_USER: airflow
      POSTGRES_PASSWORD: airflow
      POSTGRES_DB: airflow
    volumes:
      - postgres-db-volume:/var/lib/postgresql/data
    healthcheck:
      test: ["CMD", "pg_isready", "-U", "airflow"]
      interval: 10s
      retries: 5
      start_period: 5s
    restart: always
    ports:
      - "5432:5432"
    expose:
      - "5432"

  redis:
    image: redis:latest
    expose:
      - 6379
    healthcheck:
      test: ["CMD", "redis-cli", "ping"]
      interval: 10s
      timeout: 30s
      retries: 50
      start_period: 30s
    restart: always

  airflow-webserver:
    <<: *airflow-common
    command: webserver
    ports:
      - "8080:8080"
    healthcheck:
      test: ["CMD", "curl", "--fail", "http://localhost:8080/health"]
      interval: 30s
      timeout: 10s
      retries: 5
      start_period: 30s
    restart: always
    depends_on:
      <<: *airflow-common-depends-on
      airflow-init:
        condition: service_completed_successfully

  airflow-scheduler:
    <<: *airflow-common
    command: scheduler
    healthcheck:
      test: ["CMD", "curl", "--fail", "http://localhost:8974/health"]
      interval: 30s
      timeout: 10s
      retries: 5
      start_period: 30s
    restart: always
    depends_on:
      <<: *airflow-common-depends-on
      airflow-init:
        condition: service_completed_successfully

  airflow-worker:
    <<: *airflow-common
    command: celery worker
    healthcheck:
      # yamllint disable rule:line-length
      test:
        - "CMD-SHELL"
        - 'celery --app airflow.providers.celery.executors.celery_executor.app inspect ping -d "celery@$${HOSTNAME}" || celery --app airflow.executors.celery_executor.app inspect ping -d "celery@$${HOSTNAME}"'
      interval: 30s
      timeout: 10s
      retries: 5
      start_period: 30s
    environment:
      <<: *airflow-common-env
      # Required to handle warm shutdown of the celery workers properly
      # See https://airflow.apache.org/docs/docker-stack/entrypoint.html#signal-propagation
      DUMB_INIT_SETSID: "0"
    restart: always
    depends_on:
      <<: *airflow-common-depends-on
      airflow-init:
        condition: service_completed_successfully

  airflow-triggerer:
    <<: *airflow-common
    command: triggerer
    healthcheck:
      test: ["CMD-SHELL", 'airflow jobs check --job-type TriggererJob --hostname "$${HOSTNAME}"']
      interval: 30s
      timeout: 10s
      retries: 5
      start_period: 30s
    restart: always
    depends_on:
      <<: *airflow-common-depends-on
      airflow-init:
        condition: service_completed_successfully

  airflow-init:
    <<: *airflow-common
    entrypoint: /bin/bash
    # yamllint disable rule:line-length
    command:
      - -c
      - |
        function ver() {
          printf "%04d%04d%04d%04d" $${1//./ }
        }
        airflow_version=$$(AIRFLOW__LOGGING__LOGGING_LEVEL=INFO && gosu airflow airflow version)
        airflow_version_comparable=$$(ver $${airflow_version})
        min_airflow_version=2.2.0
        min_airflow_version_comparable=$$(ver $${min_airflow_version})
        if (( airflow_version_comparable < min_airflow_version_comparable )); then
          echo
          echo -e "\033[1;31mERROR!!!: Too old Airflow version $${airflow_version}!\e[0m"
          echo "The minimum Airflow version supported: $${min_airflow_version}. Only use this or higher!"
          echo
          exit 1
        fi
        if [[ -z "${AIRFLOW_UID}" ]]; then
          echo
          echo -e "\033[1;33mWARNING!!!: AIRFLOW_UID not set!\e[0m"
          echo "If you are on Linux, you SHOULD follow the instructions below to set "
          echo "AIRFLOW_UID environment variable, otherwise files will be owned by root."
          echo "For other operating systems you can get rid of the warning with manually created .env file:"
          echo " See: https://airflow.apache.org/docs/apache-airflow/stable/howto/docker-compose/index.html#setting-the-right-airflow-user"
          echo
        fi
        one_meg=1048576
        mem_available=$$(($$(getconf _PHYS_PAGES) * $$(getconf PAGE_SIZE) / one_meg))
        cpus_available=$$(grep -cE 'cpu[0-9]+' /proc/stat)
        disk_available=$$(df / | tail -1 | awk '{print $$4}')
        warning_resources="false"
        if (( mem_available < 4000 )) ; then
          echo
          echo -e "\033[1;33mWARNING!!!: Not enough memory available for Docker.\e[0m"
          echo "At least 4GB of memory required. You have $$(numfmt --to iec $$((mem_available * one_meg)))"
          echo
          warning_resources="true"
        fi
        if (( cpus_available < 2 )); then
          echo
          echo -e "\033[1;33mWARNING!!!: Not enough CPUS available for Docker.\e[0m"
          echo "At least 2 CPUs recommended. You have $${cpus_available}"
          echo
          warning_resources="true"
        fi
        if (( disk_available < one_meg * 10 )); then
          echo
          echo -e "\033[1;33mWARNING!!!: Not enough Disk space available for Docker.\e[0m"
          echo "At least 10 GBs recommended. You have $$(numfmt --to iec $$((disk_available * 1024 )))"
          echo
          warning_resources="true"
        fi
        if [[ $${warning_resources} == "true" ]]; then
          echo
          echo -e "\033[1;33mWARNING!!!: You have not enough resources to run Airflow (see above)!\e[0m"
          echo "Please follow the instructions to increase amount of resources available:"
          echo " https://airflow.apache.org/docs/apache-airflow/stable/howto/docker-compose/index.html#before-you-begin"
          echo
        fi
        mkdir -p /sources/logs /sources/dags /sources/plugins
        chown -R "${AIRFLOW_UID}:0" /sources/{logs,dags,plugins}
        exec /entrypoint airflow version
    # yamllint enable rule:line-length
    environment:
      <<: *airflow-common-env
      _AIRFLOW_DB_MIGRATE: 'true'
      _AIRFLOW_WWW_USER_CREATE: 'true'
      _AIRFLOW_WWW_USER_USERNAME: ${_AIRFLOW_WWW_USER_USERNAME:-airflow}
      _AIRFLOW_WWW_USER_PASSWORD: ${_AIRFLOW_WWW_USER_PASSWORD:-airflow}
      _PIP_ADDITIONAL_REQUIREMENTS: ''
    user: "0:0"
    volumes:
      - ${AIRFLOW_PROJ_DIR:-.}:/sources

  airflow-cli:
    <<: *airflow-common
    profiles:
      - debug
    environment:
      <<: *airflow-common-env
      CONNECTION_CHECK_MAX_COUNT: "0"
    # Workaround for entrypoint issue. See: https://github.com/apache/airflow/issues/16252
    command:
      - bash
      - -c
      - airflow

  # You can enable flower by adding "--profile flower" option e.g. docker-compose --profile flower up
  # or by explicitly targeted on the command line e.g. docker-compose up flower.
  # See: https://docs.docker.com/compose/profiles/
  flower:
    <<: *airflow-common
    command: celery flower
    profiles:
      - flower
    ports:
      - "5555:5555"
    healthcheck:
      test: ["CMD", "curl", "--fail", "http://localhost:5555/"]
      interval: 30s
      timeout: 10s
      retries: 5
      start_period: 30s
    restart: always
    depends_on:
      <<: *airflow-common-depends-on
      airflow-init:
        condition: service_completed_successfully

volumes:
  postgres-db-volume:
```

Afterwards, I create the required Airflow folders and the `.env` file in the same location:

```bash
mkdir -p ./dags ./logs ./plugins ./config
echo -e "AIRFLOW_UID=$(id -u)" > .env
```

After the previous steps, the folder structure will look like this:

```
└── Airflow
    ├── config
    ├── dags
    ├── docker-compose.yml
    ├── .env
    ├── logs
    └── plugins
```

Once the folder structure is created, we can proceed to initialize Airflow:

```bash
docker compose up airflow-init
```

(More detailed information in here)

3.1.2. Preparing Project

I start by navigating (`cd`) to the location where the projects' repositories will reside and cloning them there. In my case, the folder structure looks like this:

```
└── GitHub
    └── project_1
        ├── Dockerfile
        ├── docker-compose.yml
        ├── requirements.txt
        └── main.py
```

Example files

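Dockerfile

```dockerfile
FROM python:3.10-bullseye

WORKDIR /app

# Install dependencies first so this layer is cached between code changes
COPY requirements.txt ./
RUN pip install --no-cache-dir -r requirements.txt

COPY . ./

# Serve the FastAPI app on port 80 inside the container
CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "80"]
```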

docker-compose.yml

```yaml
services:
  project_1:
    image: project_1
    container_name: project_1
    volumes:
      - ./:/app/downloads
    ports:
      - "8101:80"
    expose:
      - "80"
    restart: always
```

main.py

```python
import datetime
import os

from fastapi import FastAPI
from starlette.responses import RedirectResponse

app = FastAPI()

# Folder (relative to WORKDIR /app) that is mounted as a volume in docker-compose.yml
DOWNLOADS_DIR = "downloads"


@app.get("/")
def main():
    # Redirect the root URL to the interactive API docs
    return RedirectResponse(url="/docs")


@app.get("/create_file")
def create_file():
    # Build a timestamped filename, e.g. file_20231005_140322.txt
    timestamp = datetime.datetime.now().strftime("%Y%m%d_%H%M%S")
    filename = f"file_{timestamp}.txt"

    # Create the file inside the mounted downloads folder
    os.makedirs(DOWNLOADS_DIR, exist_ok=True)
    with open(os.path.join(DOWNLOADS_DIR, filename), "a") as f:
        f.write("Hello world!!")

    return None
```

requirements.txt

```
annotated-types==0.6.0
anyio==3.7.1
click==8.1.7
exceptiongroup==1.2.0
fastapi==0.104.1
h11==0.14.0
idna==3.6
pydantic==2.5.2
pydantic-core==2.14.5
sniffio==1.3.0
starlette==0.27.0
typing-extensions==4.8.0
uvicorn==0.24.0.post1
```
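Because `create_file` names files with a sortable timestamp, a downstream processing step can pick up raw files in creation order. A minimal sketch, assuming the `file_YYYYMMDD_HHMMSS.txt` naming used above and a hypothetical `processed` set holding the names already handled (how that set is persisted is left open); `file_timestamp` and `pending_files` are illustrative helpers.

```python
from datetime import datetime
from pathlib import Path

# Matches the naming scheme used by create_file(): file_YYYYMMDD_HHMMSS.txt
TIMESTAMP_FORMAT = "%Y%m%d_%H%M%S"


def file_timestamp(path: Path, prefix: str = "file_") -> datetime:
    """Parse the creation timestamp encoded in a raw file's name."""
    return datetime.strptime(path.stem[len(prefix):], TIMESTAMP_FORMAT)


def pending_files(raw_dir: Path, processed: set[str], prefix: str = "file_") -> list[Path]:
    """Return the raw files that have not been processed yet, oldest first."""
    candidates = [p for p in raw_dir.glob(f"{prefix}*.txt") if p.name not in processed]
    return sorted(candidates, key=lambda p: file_timestamp(p, prefix))
```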

With the repository cloned, I build the project's image by running:

```bash
docker build -t project_1 .
```

(Note that I have decided to build the images manually every time something changes, as it is the simplest way to manage them. Ideally, I would have an automatic CI/CD workflow that checks and deploys changes once they are made, but given the nature of my projects (most of which are developed only by me), it wouldn't add much value.)

3.1.3. Adding a project and Selenium to docker-compose.yml

With Airflow and the project image ready, it is time to add the project (and Selenium) to the docker-compose.yml file, so they can be started up and shut down at the same time as the other services.

I open the Airflow docker-compose.yml and locate the following lines:

```yaml
services:
  #### Non-Airflow related containers ####

  #### Airflow related containers ####
  postgres:
    image: postgres:13
    environment:
      POSTGRES_USER: airflow
      POSTGRES_PASSWORD: airflow
      ...
```

Under the `#### Non-Airflow related containers ####` comment, where the extra services are defined, I add the following lines:

```yaml
  selenium_standalone_chrome:
    image: selenium/standalone-chrome
    #image: seleniarm/standalone-chromium:latest # Use this when running in an ARM architecture machine (like a Raspberry Pi)
    privileged: true
    shm_size: 2g
    ports:
      - "4444:4444"
    expose:
      - "4444"

  project_1:
    image: project_1
    container_name: project_1
    volumes:
      - /home/daniel/Documents/Datalake/project_1:/app/downloads
    ports:
      - "8101:80"
    expose:
      - "80"
    restart: always
```

The docker-compose.yml file will look like this:

docker-compose.yml

```yaml
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing,
# software distributed under the License is distributed on an
# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
# KIND, either express or implied. See the License for the
# specific language governing permissions and limitations
# under the License.
#
# Basic Airflow cluster configuration for CeleryExecutor with Redis and PostgreSQL.
#
# WARNING: This configuration is for local development. Do not use it in a production deployment.
#
# This configuration supports basic configuration using environment variables or an .env file
# The following variables are supported:
#
# AIRFLOW_IMAGE_NAME - Docker image name used to run Airflow.
# Default: apache/airflow:2.7.3
# AIRFLOW_UID - User ID in Airflow containers
# Default: 50000
# AIRFLOW_PROJ_DIR - Base path to which all the files will be volumed.
# Default: .
# Those configurations are useful mostly in case of standalone testing/running Airflow in test/try-out mode
#
# _AIRFLOW_WWW_USER_USERNAME - Username for the administrator account (if requested).
# Default: airflow
# _AIRFLOW_WWW_USER_PASSWORD - Password for the administrator account (if requested).
# Default: airflow
# _PIP_ADDITIONAL_REQUIREMENTS - Additional PIP requirements to add when starting all containers.
# Use this option ONLY for quick checks. Installing requirements at container
# startup is done EVERY TIME the service is started.
# A better way is to build a custom image or extend the official image
# as described in https://airflow.apache.org/docs/docker-stack/build.html.
# Default: ''
#
# Feel free to modify this file to suit your needs.
---
version: '3.8'
x-airflow-common:
  &airflow-common
  # In order to add custom dependencies or upgrade provider packages you can use your extended image.
  # Comment the image line, place your Dockerfile in the directory where you placed the docker-compose.yaml
  # and uncomment the "build" line below, Then run `docker-compose build` to build the images.
  image: ${AIRFLOW_IMAGE_NAME:-apache/airflow:2.7.3}
  # build: .
  environment:
    &airflow-common-env
    AIRFLOW__CORE__EXECUTOR: CeleryExecutor
    AIRFLOW__DATABASE__SQL_ALCHEMY_CONN: postgresql+psycopg2://airflow:airflow@postgres/airflow
    # For backward compatibility, with Airflow <2.3
    AIRFLOW__CORE__SQL_ALCHEMY_CONN: postgresql+psycopg2://airflow:airflow@postgres/airflow
    AIRFLOW__CELERY__RESULT_BACKEND: db+postgresql://airflow:airflow@postgres/airflow
    AIRFLOW__CELERY__BROKER_URL: redis://:@redis:6379/0
    AIRFLOW__CORE__FERNET_KEY: ''
    AIRFLOW__CORE__DAGS_ARE_PAUSED_AT_CREATION: 'true'
    AIRFLOW__CORE__LOAD_EXAMPLES: 'false'
    AIRFLOW__API__AUTH_BACKENDS: 'airflow.api.auth.backend.basic_auth,airflow.api.auth.backend.session'
    # yamllint disable rule:line-length
    # Use simple http server on scheduler for health checks
    # See https://airflow.apache.org/docs/apache-airflow/stable/administration-and-deployment/logging-monitoring/check-health.html#scheduler-health-check-server
    # yamllint enable rule:line-length
    AIRFLOW__SCHEDULER__ENABLE_HEALTH_CHECK: 'true'
    # WARNING: Use _PIP_ADDITIONAL_REQUIREMENTS option ONLY for a quick checks
    # for other purpose (development, test and especially production usage) build/extend Airflow image.
    _PIP_ADDITIONAL_REQUIREMENTS: ${_PIP_ADDITIONAL_REQUIREMENTS:-}
  volumes:
    - ${AIRFLOW_PROJ_DIR:-.}/dags:/opt/airflow/dags
    - ${AIRFLOW_PROJ_DIR:-.}/logs:/opt/airflow/logs
    - ${AIRFLOW_PROJ_DIR:-.}/config:/opt/airflow/config
    - ${AIRFLOW_PROJ_DIR:-.}/plugins:/opt/airflow/plugins
  user: "${AIRFLOW_UID:-50000}:0"
  depends_on:
    &airflow-common-depends-on
    redis:
      condition: service_healthy
    postgres:
      condition: service_healthy

services:
  #### Non-Airflow related containers ####
  selenium_standalone_chrome:
    image: selenium/standalone-chrome
    #image: seleniarm/standalone-chromium:latest # Use this when running in an ARM architecture machine (like a Raspberry Pi)
    privileged: true
    shm_size: 2g
    ports:
      - "4444:4444"
    expose:
      - "4444"

  project_1:
    image: project_1
    container_name: project_1
    volumes:
      - /home/daniel/Documents/Datalake/project_1:/app/downloads
    ports:
      - "8101:80"
    expose:
      - "80"
    restart: always

  #### Airflow related containers ####
  postgres:
    image: postgres:13
    environment:
      POSTGRES_USER: airflow
      POSTGRES_PASSWORD: airflow
      POSTGRES_DB: airflow
    volumes:
      - postgres-db-volume:/var/lib/postgresql/data
    healthcheck:
      test: ["CMD", "pg_isready", "-U", "airflow"]
      interval: 10s
      retries: 5
      start_period: 5s
    restart: always
    ports:
      - "5432:5432"
    expose:
      - "5432"

  redis:
    image: redis:latest
    expose:
      - 6379
    healthcheck:
      test: ["CMD", "redis-cli", "ping"]
      interval: 10s
      timeout: 30s
      retries: 50
      start_period: 30s
    restart: always

  airflow-webserver:
    <<: *airflow-common
    command: webserver
    ports:
      - "8080:8080"
    healthcheck:
      test: ["CMD", "curl", "--fail", "http://localhost:8080/health"]
      interval: 30s
      timeout: 10s
      retries: 5
      start_period: 30s
    restart: always
    depends_on:
      <<: *airflow-common-depends-on
      airflow-init:
        condition: service_completed_successfully

  airflow-scheduler:
    <<: *airflow-common
    command: scheduler
    healthcheck:
      test: ["CMD", "curl", "--fail", "http://localhost:8974/health"]
      interval: 30s
      timeout: 10s
      retries: 5
      start_period: 30s
    restart: always
    depends_on:
      <<: *airflow-common-depends-on
      airflow-init:
        condition: service_completed_successfully

  airflow-worker:
    <<: *airflow-common
    command: celery worker
    healthcheck:
      # yamllint disable rule:line-length
      test:
        - "CMD-SHELL"
        - 'celery --app airflow.providers.celery.executors.celery_executor.app inspect ping -d "celery@$${HOSTNAME}" || celery --app airflow.executors.celery_executor.app inspect ping -d "celery@$${HOSTNAME}"'
      interval: 30s
      timeout: 10s
      retries: 5
      start_period: 30s
    environment:
      <<: *airflow-common-env
      # Required to handle warm shutdown of the celery workers properly
      # See https://airflow.apache.org/docs/docker-stack/entrypoint.html#signal-propagation
      DUMB_INIT_SETSID: "0"
    restart: always
    depends_on:
      <<: *airflow-common-depends-on
      airflow-init:
        condition: service_completed_successfully

  airflow-triggerer:
    <<: *airflow-common
    command: triggerer
    healthcheck:
      test: ["CMD-SHELL", 'airflow jobs check --job-type TriggererJob --hostname "$${HOSTNAME}"']
      interval: 30s
      timeout: 10s
      retries: 5
      start_period: 30s
    restart: always
    depends_on:
      <<: *airflow-common-depends-on
      airflow-init:
        condition: service_completed_successfully

  airflow-init:
    <<: *airflow-common
    entrypoint: /bin/bash
    # yamllint disable rule:line-length
    command:
      - -c
      - |
        function ver() {
          printf "%04d%04d%04d%04d" $${1//./ }
        }
        airflow_version=$$(AIRFLOW__LOGGING__LOGGING_LEVEL=INFO && gosu airflow airflow version)
        airflow_version_comparable=$$(ver $${airflow_version})
        min_airflow_version=2.2.0
        min_airflow_version_comparable=$$(ver $${min_airflow_version})
        if (( airflow_version_comparable < min_airflow_version_comparable )); then
          echo
          echo -e "\033[1;31mERROR!!!: Too old Airflow version $${airflow_version}!\e[0m"
          echo "The minimum Airflow version supported: $${min_airflow_version}. Only use this or higher!"
          echo
          exit 1
        fi
        if [[ -z "${AIRFLOW_UID}" ]]; then
          echo
          echo -e "\033[1;33mWARNING!!!: AIRFLOW_UID not set!\e[0m"
          echo "If you are on Linux, you SHOULD follow the instructions below to set "
          echo "AIRFLOW_UID environment variable, otherwise files will be owned by root."
          echo "For other operating systems you can get rid of the warning with manually created .env file:"
          echo " See: https://airflow.apache.org/docs/apache-airflow/stable/howto/docker-compose/index.html#setting-the-right-airflow-user"
          echo
        fi
        one_meg=1048576
        mem_available=$$(($$(getconf _PHYS_PAGES) * $$(getconf PAGE_SIZE) / one_meg))
        cpus_available=$$(grep -cE 'cpu[0-9]+' /proc/stat)
        disk_available=$$(df / | tail -1 | awk '{print $$4}')
        warning_resources="false"
        if (( mem_available < 4000 )) ; then
          echo
          echo -e "\033[1;33mWARNING!!!: Not enough memory available for Docker.\e[0m"
          echo "At least 4GB of memory required. You have $$(numfmt --to iec $$((mem_available * one_meg)))"
          echo
          warning_resources="true"
        fi
        if (( cpus_available < 2 )); then
          echo
          echo -e "\033[1;33mWARNING!!!: Not enough CPUS available for Docker.\e[0m"
          echo "At least 2 CPUs recommended. You have $${cpus_available}"
          echo
          warning_resources="true"
        fi
        if (( disk_available < one_meg * 10 )); then
          echo
          echo -e "\033[1;33mWARNING!!!: Not enough Disk space available for Docker.\e[0m"
          echo "At least 10 GBs recommended. You have $$(numfmt --to iec $$((disk_available * 1024 )))"
          echo
          warning_resources="true"
        fi
        if [[ $${warning_resources} == "true" ]]; then
          echo
          echo -e "\033[1;33mWARNING!!!: You have not enough resources to run Airflow (see above)!\e[0m"
          echo "Please follow the instructions to increase amount of resources available:"
          echo " https://airflow.apache.org/docs/apache-airflow/stable/howto/docker-compose/index.html#before-you-begin"
          echo
        fi
        mkdir -p /sources/logs /sources/dags /sources/plugins
        chown -R "${AIRFLOW_UID}:0" /sources/{logs,dags,plugins}
        exec /entrypoint airflow version
    # yamllint enable rule:line-length
    environment:
      <<: *airflow-common-env
      _AIRFLOW_DB_MIGRATE: 'true'
      _AIRFLOW_WWW_USER_CREATE: 'true'
      _AIRFLOW_WWW_USER_USERNAME: ${_AIRFLOW_WWW_USER_USERNAME:-airflow}
      _AIRFLOW_WWW_USER_PASSWORD: ${_AIRFLOW_WWW_USER_PASSWORD:-airflow}
      _PIP_ADDITIONAL_REQUIREMENTS: ''
    user: "0:0"
    volumes:
      - ${AIRFLOW_PROJ_DIR:-.}:/sources

  airflow-cli:
    <<: *airflow-common
    profiles:
      - debug
    environment:
      <<: *airflow-common-env
      CONNECTION_CHECK_MAX_COUNT: "0"
    # Workaround for entrypoint issue. See: https://github.com/apache/airflow/issues/16252
    command:
      - bash
      - -c
      - airflow

  # You can enable flower by adding "--profile flower" option e.g. docker-compose --profile flower up
  # or by explicitly targeted on the command line e.g. docker-compose up flower.
  # See: https://docs.docker.com/compose/profiles/
  flower:
    <<: *airflow-common
    command: celery flower
    profiles:
      - flower
    ports:
      - "5555:5555"
    healthcheck:
      test: ["CMD", "curl", "--fail", "http://localhost:5555/"]
      interval: 30s
      timeout: 10s
      retries: 5
      start_period: 30s
    restart: always
    depends_on:
      <<: *airflow-common-depends-on
      airflow-init:
        condition: service_completed_successfully

volumes:
  postgres-db-volume:
```

Finally, I test that everything works by running:

```bash
docker compose up
```

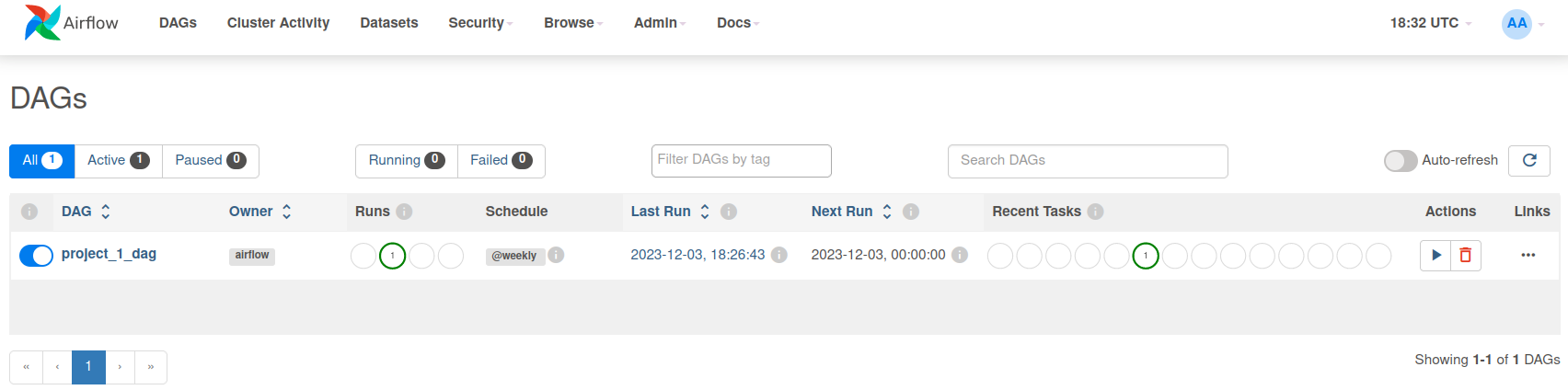
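To cover the monitoring goal from the introduction without opening a browser, the webserver's `/health` endpoint (the same one its Compose healthcheck curls) can also be polled from the host. A minimal sketch, assuming the JSON shape returned by Airflow 2.x (one object per component, each with a `status` field, where `null` means the component is not reported, e.g. no standalone DAG processor); `fetch_health` and `unhealthy_components` are hypothetical helper names.

```python
import json
import urllib.request

# Webserver port as published in docker-compose.yml
AIRFLOW_HEALTH_URL = "http://localhost:8080/health"


def fetch_health(url: str = AIRFLOW_HEALTH_URL, timeout: float = 10.0) -> dict:
    """GET the webserver's /health endpoint and decode its JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)


def unhealthy_components(payload: dict) -> list[str]:
    """Return the components whose status is reported and is not 'healthy'."""
    return sorted(
        name
        for name, info in payload.items()
        # A None status is treated as "not reported" rather than as a failure
        if isinstance(info, dict) and info.get("status") not in ("healthy", None)
    )
```

With the stack running, `unhealthy_components(fetch_health())` returning an empty list means every reported component is healthy.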

3.1.4. Adding custom DAGs to Airflow

With the services running, it is time to add a DAG to Airflow. To do so, I just need to add the following DAG file to the dags folder.

project_1_dag.py

```python
from datetime import datetime

import requests
from airflow import DAG
from airflow.operators.python import PythonOperator

# Port the project's container listens on inside the Compose network
# (8101 is only the port published on the host)
PORT = 80


def call_api(url: str):
    # Fail the task on any non-2xx response so Airflow marks it as failed
    response = requests.get(url, timeout=60)
    if not 200 <= response.status_code <= 299:
        raise Exception(f"Request to {url} failed with status {response.status_code}.")


def _create_file():
    url = f"http://project_1:{PORT}/create_file"
    call_api(url)


with DAG(
    "project_1_dag",  # DAG id
    start_date=datetime(2023, 10, 5),  # start date: 5 October 2023
    schedule="@weekly",  # preset: once a week, at midnight on Sunday
    catchup=False,
):
    # Tasks are defined inside the DAG context
    create_file = PythonOperator(
        task_id="create_file",
        python_callable=_create_file,
    )

    create_file
```

Notice that, as all the services belong to the same network, I can reach each service directly by its service name: "http://project_1:{PORT}/create_file". Also note that the DAG uses the container's port (80), not the port published on the host (8101).
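Projects can reach the shared Selenium container the same way, through its service name `selenium_standalone_chrome` on port 4444. Below is a minimal sketch of how a project could download a page's rendered HTML through it. It assumes the `selenium` 4 Python package is added to the project image (it is not in the example requirements.txt), and `remote_chrome` and `fetch_html` are hypothetical helper names. The driver factory is injectable so the logic can be exercised without a browser.

```python
from typing import Callable, Protocol

# Service name and port from docker-compose.yml; only resolvable from
# containers attached to the same Compose network.
SELENIUM_URL = "http://selenium_standalone_chrome:4444"


class Driver(Protocol):
    """The subset of the WebDriver interface this sketch relies on."""

    page_source: str

    def get(self, url: str) -> None: ...

    def quit(self) -> None: ...


def remote_chrome(command_executor: str = SELENIUM_URL) -> Driver:
    """Open a session on the shared Selenium container (requires `selenium`)."""
    from selenium import webdriver  # imported lazily so the module loads without it

    return webdriver.Remote(command_executor=command_executor, options=webdriver.ChromeOptions())


def fetch_html(url: str, driver_factory: Callable[[], Driver] = remote_chrome) -> str:
    """Return the rendered HTML of `url`, always releasing the browser session."""
    driver = driver_factory()
    try:
        driver.get(url)
        return driver.page_source
    finally:
        # Quitting frees the session slot on the shared container for other projects
        driver.quit()
```

Closing the session in `finally` matters more with a shared browser than with a per-project one: a session left open can keep occupying the container until it times out.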

3.2. Running the services

With everything ready, starting and shutting down all the services becomes extremely simple. It only requires running the following in the folder containing my docker-compose.yml file:

```bash
docker compose up     # start all services
docker compose down   # stop and remove them
```

For illustration, this is what my current setup looks like:

```
├── Airflow
│   ├── config
│   ├── dags
│   ├── docker-compose.yml
│   ├── .env
│   ├── logs
│   └── plugins
├── GitHub
│   ├── project_1
│   ├── project_2
│   └── project_3
└── Datalake
    ├── project_1
    ├── project_2
    └── project_3
```

4. Troubleshooting

Although most services should be accessible via a browser (the Docker ports are forwarded to the host's ports), there may be situations in which they don't work. For these cases, it is a good idea to have a troubleshooting Docker image ready that can connect to the problematic containers. I like to use the nicolaka/netshoot image, as it contains most of the tools needed to troubleshoot network-related issues.

To troubleshoot an already running Docker container, attach a netshoot container to that container's network namespace by running:

```bash
docker run -it --net container:<container_name> nicolaka/netshoot
```
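For a quicker first check, reachability can also be tested from Python inside any container on the Compose network (for example, the Airflow worker). This is a minimal standard-library sketch; `is_reachable` is a hypothetical helper, and a `True` result only means a TCP connection could be opened, not that the service behind it is healthy.

```python
import socket


def is_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened.

    Inside the Compose network, `host` can be a service name such as
    "project_1", which Docker's embedded DNS resolves to the container.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures (socket.gaierror), refused connections and timeouts
        return False
```

For example, `is_reachable("project_1", 80)` and `is_reachable("selenium_standalone_chrome", 4444)` run from a worker container would separate name-resolution or networking problems from application errors.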

Notes on Airflow

For simplicity's sake, the Airflow logs and DAGs are located in the same directory as the Compose file. This may become an issue with heavy Airflow use, as the logs will start taking up too much space. Keeping the DAGs in that location is also not ideal, as there is no easy way to put them under version control there. However, for my use cases both issues are very unlikely, so I decided to go for the simpler setup.

These points will be revisited if/when I set up the new microservice-oriented architecture, as the main idea behind it is to increase the scalability and fault tolerance of the system.
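If log growth ever does become a problem before then, a small scheduled clean-up is one option. The sketch below assumes Airflow's default file-based logging into the mounted `logs` folder, where log files end in `.log`; `prune_old_logs` and the 30-day retention are hypothetical choices, not part of the setup above. Empty directories are left in place.

```python
import time
from pathlib import Path
from typing import Optional


def prune_old_logs(logs_dir: Path, max_age_days: float = 30, now: Optional[float] = None) -> list[Path]:
    """Delete *.log files under logs_dir older than max_age_days; return removed paths."""
    cutoff = (time.time() if now is None else now) - max_age_days * 86_400
    removed = []
    # Materialize the listing first so files are not deleted mid-traversal
    for log_file in list(logs_dir.rglob("*.log")):
        if log_file.is_file() and log_file.stat().st_mtime < cutoff:
            log_file.unlink()
            removed.append(log_file)
    return sorted(removed)
```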